Will AI replace white paper writers?

Is there a future for humans writing white papers?

Or is it just a matter of time before AI replaces all white paper writers and the bottom falls out of the market?

Why should you waste your time learning a skill that’s going to be obsolete in a few years?

This article, updated in early 2023, takes a look at this contentious issue.

Where it all began: Little League

AI started by writing short, simple items: Little League baseball stories for local newspapers that couldn’t afford to send a real reporter to the games.

Like an elementary school textbook, these items contain a limited vocabulary and a lot of repetition.

For example, one “creative” decision that baseball-writing software makes is to pick which synonym to use for a victory.

Did the winning team beat, batter, blast, clobber, conquer, crush, defeat, destroy, decimate, dominate, eliminate, eviscerate, flatten, make mincemeat out of, massacre, pound, rout, rule, smash, squash, thump, or terminate the losers?

After this triumphant show of stylistic genius, AI proponents claimed throughout the early 2020s that it was now moving on to longer, more complex projects.

Should white paper writers be concerned? Meh.

Here are seven reasons why I don’t think white paper writers should spend a lot of time worrying about AI, even in the era of ChatGPT.

Not to worry about AI reason #1: ChatGPT says it can’t do long-form content

Go ahead: Ask ChatGPT to write you a white paper.

Every time I ask, it says it can’t. It says only people can write those.

And believe me, I’ve asked.

Not to worry about AI reason #2: White papers are too long and challenging

White papers are long-form content designed to solve a particular marketing problem for an individual company. That company may need to generate leads, get noticed in a crowd, or support a product launch.

White papers are not Little League baseball reports.

White papers are not short e-mails or bland blog posts like those ChatGPT can output today.

And white papers are not about public issues like an election or a celebrity breakup, with lots of other coverage an AI could access for input.

White papers are long, custom, research-driven, challenging writing projects.

Who would ask AI to write those, when there are so many simpler projects it can tackle first?

Not to worry about AI reason #3: White papers take deep research

Every white paper takes intense research to find the golden nuggets that prove a certain point.

Can ChatGPT do that?

The AI can certainly access and process a lot of information very quickly. Computers have an edge on humans there, all right.

But can AI select the most compelling evidence from the most respected sources to appeal to a certain type of audience in a certain industry?

And can it create footnotes to credible content, so you know it isn’t just relying on misinformation or making sh*t up?

Can ChatGPT interview company SMEs and pull out their knowledge in a coherent form, even when it’s never been published anywhere?

I don’t think so.

So why not get a writer to do the research? Or let the writer and the AI work as a team, so each can do what they do best?

Let the software run smart searches and dredge up a pile of material, create good-enough summaries, and let the writer pick the best sources to use.

And then ask AI to format the footnotes in your chosen format.

Let it do the mechanical and mind-numbing chores involved in a white paper, while the human writer does the creative and more challenging tasks.

Not to worry about AI reason #4: White papers take rhetorical finesse

An effective white paper must build a compelling argument that runs several thousand words and moves the reader to take the next step in their customer journey.

To convert, in other words.

Could AI do that?

I doubt it. Computers may be logical, but does cold and scientific logic alone convert prospects?

Warm-blooded business people need a skillful rhetorical approach that weaves together facts and logic, anticipates likely objections, and adds a touch of emotion at the perfect spot to finish the argument on a high note.

White papers demand nuanced writing that walks a thin line between explanation and persuasion.

Could AI do that?

I doubt it. More likely, it would come up with what Bob Bly calls “Google Goulash”: a hodge-podge of search engine results cobbled together with little craft or insight.

Bland, average, C-level content that’s about as good as the dreck published by companies that just don’t get it, or just don’t care. Maybe they’re too small, too local, or too cheap.

To those companies, I say, “Go to it! Get all you can eat for $20 a month!! Chuckle about how much you’re saving!”

But don’t cry to me when a more savvy competitor eats your lunch because they got live human beings to craft content to steal away your customers.

Not to worry about AI reason #5: White papers have to make sense

To prepare the AI programs of today, developers feed them a whole lot of data and then ask them to come up with something original.

So what if someone fed 100 white papers into an AI and asked it to write something original?

Blockchain entrepreneur Clay Space actually tried that.

A few years ago, he fed 100 white papers for cryptocurrency startups into an AI and asked it to write a white paper for a new venture.

The results are hilarious, even after being edited by a human.

Here’s the abstract:

The contents below detail a strategy for creating a mass-market, decentralized supercomputer running a hybrid proof of stake standard utility token on top of a multi-blockchain ICO. This will replace your government.

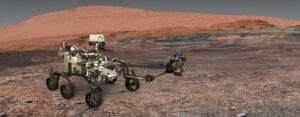

Until ChatGPT, every AI I tried wrote content that sounded like it came from a Martian.

White paper readers are not looking for nonsense text or C-grade content that sounds like what they can find everywhere else.

Not to worry about AI reason #6: White papers need more than facts

What if you give ChatGPT more to work with?

What if you ask it to write, section by section so it doesn’t get overwhelmed, a white paper for a certain company in a particular industry with an expressed marketing problem and a defined set of goals?

Do you think it would come up with anything you can use?

Would it have a clue about what the client wants? And the street smarts to suggest what they really need?

Would it have the sensitivity to write any text that touched a human reader’s pain points, made them feel anything, painted any pictures with words, soared and swooped from valley to mountaintop?

I doubt it.

A white paper needs more than hard facts and scientific logic.

To be effective, a white paper needs the human touch.

Not to worry about AI reason #7: Effective white papers will continue to be written by humans for humans

Perhaps, in time, AI will get there.

But when programs start writing white papers, the days of any commercial writing job may be over.

Think about it. What content will AI write first?

Tweets. Landing pages. E-mails. Blog posts. Perhaps web pages.

Then whole websites, with every element of every page tested and refined over months and years.

Then newsletters.

And when all those formats can be written by algorithms, what will be left?

What will be among the last bastions of content still written by humans for humans?

I’m convinced that will likely be white papers.

I’m not worried about ChatGPT stealing my job as a white paper writer.

Before it gets to me, it will have to plow through almost every other writer on Earth.

And by that time, no one may need white papers anyway.

By then, AI may be doing everyone’s job!

What do you think about AI and white papers? Are you worried your job writing them may be replaced by software? Leave us your comments below.

Originally published March 29, 2022. Last updated February 23, 2023.

I think a lot of writers are concerned with this recent AI trend (myself included). However, I agree that there is a huge difference between an AI tweaking ad copy to improve conversion rate and the type of work required to create a persuasive white paper.

As Gordon and I were discussing this morning, if there is ever a time when AI masters the art of persuasion, humans will be kicked out of so many other societal functions. While it’s easy to see this through a dystopian lens, how might we envision a more positive outcome?

A society where governments have had to stem chaos by implementing a standard basic income? In this world, would people be more able to focus on work that flows from their true potential?

I’m imagining what happens when the bot drops something that sounds like the dying HAL in 2001: A Space Odyssey (“Daisy, Daisy…”) right in the meat of the WP. That’s going to instill confidence.

No doubt humans would review what a machine spit out, but one thing that’s really interesting about AI is that at its highest level even the programmers don’t understand how the algorithm is reaching its conclusions. It’s essentially a black box. This has ethical ramifications, but at the minimum it’s an unlikely recipe for producing any document of nuanced intent.

Here is a sample by an algorithm so powerful the creators won’t release it for fear of repercussions. It’s very impressive. And it would make a convincing, not very good high school paper. Think we’re safe for the moment.

https://openai.com/blog/better-language-models/#sample6

LOL.

Yes. Without knowing how a conclusion is met, would people start using “the algorithm concludes” as a proof?

That is the question, isn’t it, Angie? It’s artificial, but is it intelligence?

I studied AI in graduate school, and my employer uses it in the commercial software it sells.

That experience allows me to reinforce Gordon’s points: AI needs a lot of data to “train the model” and then the “model” is only good for that set of data. If you have a new set of data, it has to be retrained.

And what do you get? The ability to guess right in a very narrow problem space. HAL doesn’t exist yet. They had to hire actors (who read scripts by skilled writers) to pull off that act.

To build on the point: Smaller projects with simple formulaic recipes might work. But who has the budget and the I.T. expertise to implement the software, gather the data and train the models? I would think only the largest firms.

I’ll close with a challenge: Would the A.I.-generated copy beat the performance of a trained, A-list copywriter? I’d like to see that competition…